Recently, Professor Guangtao Zhai’s research team from the School of Integrated Circuits (School of Electronic Information and Electrical Engineering), Shanghai Jiao Tong University, used functional magnetic resonance imaging (fMRI) to reveal for the first time the neural mechanism of visual quality perception in the human brain, as well as the neural compensation mechanism at work when the brain processes low-quality images. The study found that low-level visual regions are sensitive to image quality, while high-level regions can “fill in” the missing information to maintain semantic understanding. This achievement not only deepens the understanding of the human visual system but also provides biological inspiration for developing more robust image quality assessment algorithms. The research results, titled “Neural Mechanisms of Visual Quality Perception and Adaptability in the Visual Pathway”, were published in Patterns, a sub-journal of Cell.

Research Background

We look at images every day: scrolling through our phones, watching videos, browsing AI-generated content. But how does the brain judge whether an image looks “good,” “clear,” or “real”? In today’s world—where video streaming, social media, and AI-generated content are increasingly widespread—visual quality assessment has become a key technology for optimizing user experience. However, most existing quality assessment models rely heavily on large amounts of labeled data and lack a deep understanding of the human brain’s perception mechanisms. In particular, when processing low-quality, blurry, or noisy images, the human brain can still recognize the content, while existing AI models perform poorly. These issues highlight the urgent need for theoretical support from neuroscience in the field of visual quality assessment.

Research Content

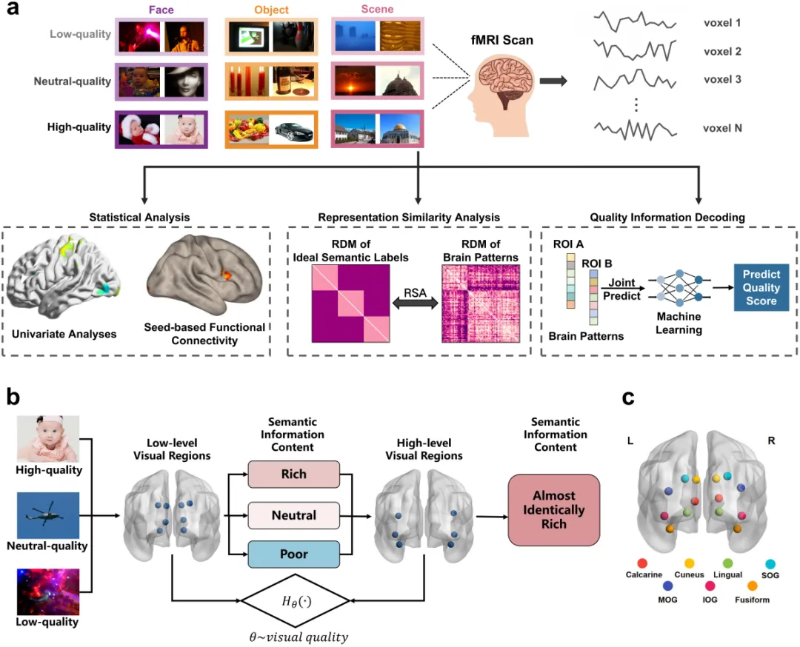

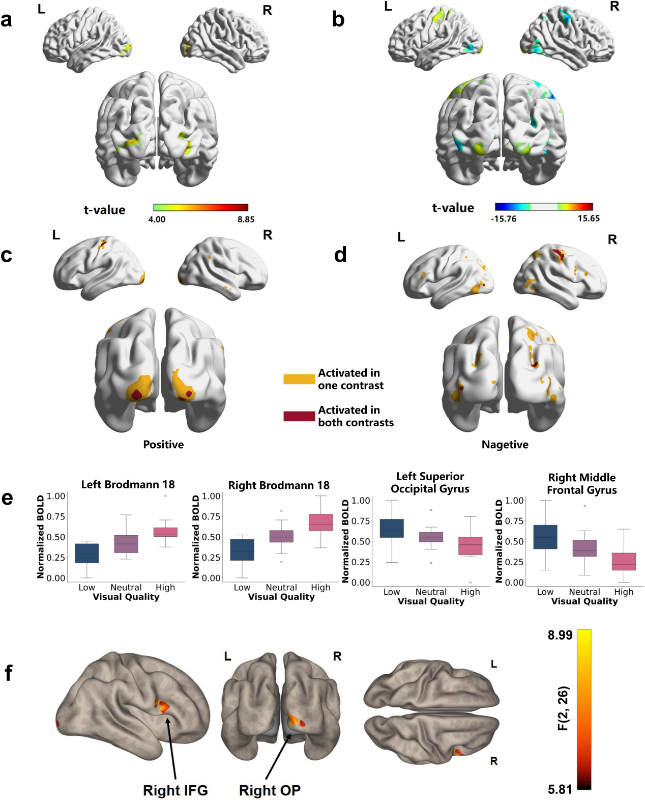

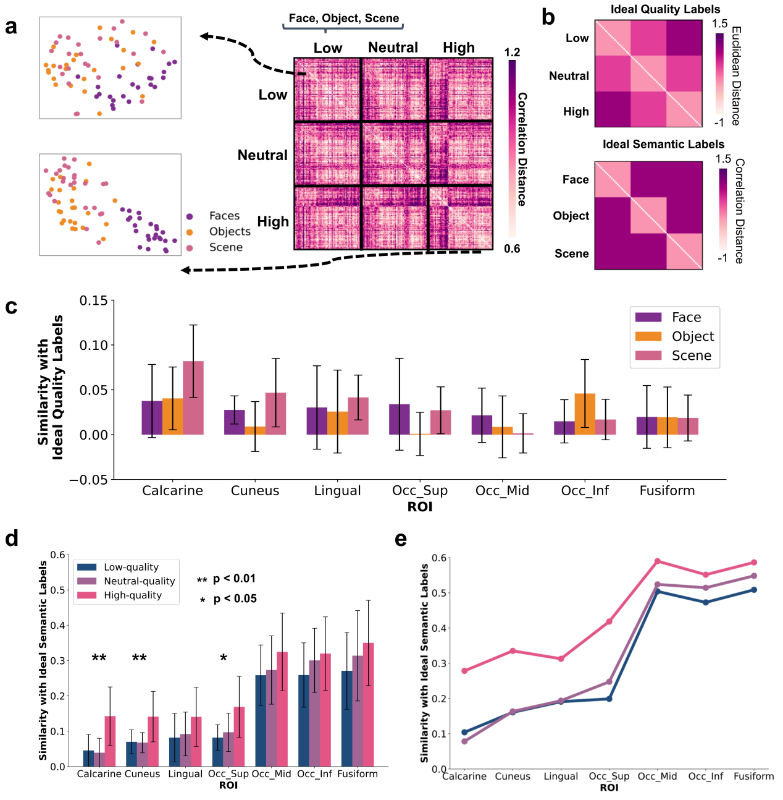

A research team led by Professor Guangtao Zhai from Shanghai Jiao Tong University, in collaboration with Renji Hospital, conducted an fMRI-based study using a reference-free experimental paradigm. Participants were asked to view images of varying quality levels (high, medium, and low) and different content types (faces, objects, and scenes), and to perform either image quality assessment or content classification tasks. Based on the collected fMRI data, the team applied univariate statistical analysis to identify brain regions sensitive to image quality, functional connectivity analysis to explore the coordination between brain areas, and representational similarity analysis to quantify the encoding strength of semantic information across regions. They also built inter-regional response pattern prediction models to uncover how visual information is transmitted along the visual pathway. Finally, given the established similarities between convolutional neural networks (CNNs) and the human visual cortex, the team validated their neuroscientific findings across multiple CNN architectures.
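To make the representational similarity analysis step more concrete, here is a minimal sketch of how a semantic encoding score could be computed for one brain region. It is not the team's pipeline: the function names, the use of 1 minus Pearson correlation as the dissimilarity measure, the category-based model matrix, and the toy response vectors are all assumptions chosen for illustration.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def rdm(patterns):
    """Upper triangle of a representational dissimilarity matrix.

    `patterns` holds one response vector per stimulus (for example,
    voxel activations); dissimilarity is 1 - Pearson correlation.
    """
    return [1.0 - pearson(a, b) for a, b in combinations(patterns, 2)]


def semantic_strength(patterns, labels):
    """Correlate the neural RDM with a model RDM built from category labels.

    The model RDM is 0 for same-category pairs and 1 otherwise, so a
    higher score means the region separates categories more cleanly.
    """
    model = [0.0 if a == b else 1.0 for a, b in combinations(labels, 2)]
    return pearson(rdm(patterns), model)


# Toy data (hypothetical): four stimuli, two categories, four "voxels" each.
labels = ["face", "face", "scene", "scene"]
clean = [[1, 2, 3, 4], [1.1, 2.1, 2.9, 4.2], [4, 3, 2, 1], [4.2, 2.9, 2.1, 1.1]]
degraded = [[1, 2, 3, 4], [4, 1, 3, 2], [2, 4, 1, 3], [3, 1, 4, 2]]

clean_score = semantic_strength(clean, labels)
degraded_score = semantic_strength(degraded, labels)
```

In this toy setup the clean patterns group by category and score close to 1, while the scrambled patterns score lower, which mirrors the kind of drop in semantic encoding that the analysis is designed to detect.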

Low-level visual areas are sensitive to image quality, while high-level areas remain stable

Semantic information in early visual regions (such as the lingual gyrus and cuneus) decreased significantly as image quality declined—dropping to as low as 35.2% of that observed under high-quality conditions—whereas higher-level regions (such as the fusiform gyrus and middle occipital gyrus) were barely affected.

Visual quality information is not encoded by a single brain region, but emerges from inter-regional differences

The study found that no individual brain region specifically encodes “image quality.” Instead, the perception of quality emerges from the information differences between low-level and high-level visual regions.
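One way to picture this inter-regional difference is as a gap between how much semantic information each stage retains under degradation. The sketch below is only an illustration: the function names are hypothetical, the simple subtraction is an assumed stand-in for whatever comparison the brain actually performs, and the high-level retention value is invented to represent "barely affected".

```python
def retention(degraded_strength, reference_strength):
    """Fraction of semantic encoding strength kept under degradation."""
    return degraded_strength / reference_strength


def quality_gap(low_level_retention, high_level_retention):
    """Inter-regional difference used here as a stand-in quality cue.

    A larger gap means low-level regions lost more information than
    high-level regions, which suggests a lower-quality image.
    """
    return high_level_retention - low_level_retention


# 0.352 is the low-level retention reported in this article for low-quality
# images; 0.95 is a HYPOTHETICAL value standing in for "barely affected".
low_quality_gap = quality_gap(0.352, 0.95)

# For a high-quality image both stages keep everything, so the gap is zero.
high_quality_gap = quality_gap(1.0, 1.0)
```

Under these assumptions the gap grows as quality falls, even though neither region on its own carries a dedicated quality signal.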

fMRI-based decoding and AI model validation

The team successfully decoded image quality information from fMRI signals and, inspired by these findings, proposed a multi-layer feature fusion strategy. This approach significantly improved image quality assessment performance on classical networks such as ResNet, achieving a 14.29% performance increase on the BID dataset.
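The general idea of multi-layer feature fusion can be sketched as follows: pool the feature maps from several depths of a network, concatenate them, and regress a quality score from the combined vector. This is a simplified illustration rather than the team's implementation; the function names, the global average pooling, the linear head, and the toy feature maps and weights are all assumptions.

```python
def global_average_pool(feature_map):
    """Collapse a [channels][height][width] feature map to one mean per channel."""
    return [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in feature_map
    ]


def fuse_layers(feature_maps):
    """Concatenate pooled features, ordered from shallow to deep layers."""
    fused = []
    for fmap in feature_maps:
        fused.extend(global_average_pool(fmap))
    return fused


def predict_quality(fused, weights, bias=0.0):
    """Linear head mapping the fused vector to a scalar quality score."""
    return sum(w * f for w, f in zip(weights, fused)) + bias


# Toy feature maps (hypothetical): one shallow channel of size 2x2 and
# two deep channels of size 1x1.
shallow = [[[1, 3], [5, 7]]]
deep = [[[2]], [[4]]]

fused = fuse_layers([shallow, deep])
score = predict_quality(fused, weights=[0.5, 0.25, 0.25])
```

Keeping shallow features alongside deep ones lets the regressor see both the quality-sensitive early responses and the more stable semantic responses, which is the contrast the fMRI findings point to.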

Future research will further explore how different types of image distortions affect brain responses, particularly the brain’s adaptive mechanisms when processing common visual degradations such as blur, noise, and compression. Moreover, as dataset size grows and visual quality categories become more refined, this study provides a solid theoretical foundation for developing more accurate and robust visual quality assessment algorithms, and offers new insights for building adaptive AI vision models capable of “intelligently restoring” low-quality images—much like the human brain does.

Publication Information

The paper was co-first-authored by Yiming Zhang, a Ph.D. student at the School of Integrated Circuits (School of Electronic Information and Electrical Engineering), Shanghai Jiao Tong University, and Dr. Yitong Chen, Assistant Professor at the same school. Associate Professor Xiongkuo Min and Professor Guangtao Zhai from the School of Integrated Circuits (School of Electronic Information and Electrical Engineering), Shanghai Jiao Tong University, served as corresponding authors.

This research was supported by the National Natural Science Foundation of China and the National Key R&D Program of China. Renji Hospital, affiliated with Shanghai Jiao Tong University, assisted with fMRI data collection.

Dr. Yitong Chen, Assistant Professor and co-first author of the paper, has published multiple research papers in top international journals and conferences, including Nature, Science, Nature Photonics, Nature Electronics, and Science Advances. He was the first in the world to achieve complex visual intelligence tasks using a photonic–electronic intelligent computing chip, demonstrating an end-to-end processing speed 3,600 times faster and energy efficiency one million times higher than leading international chips. His research has been featured by major media outlets such as Xinhua News Agency, People’s Daily, Guangming Daily, and Science and Technology Daily, and was selected as one of the Outstanding Funded Achievements by the National Natural Science Foundation of China (NSFC).

Associate Professor Xiongkuo Min mainly focuses on multimedia signal processing. He has led several research projects, including the National Natural Science Foundation of China (NSFC) Youth Category B and General Program. He was selected for the National Postdoctoral Innovative Talent Program and the Shanghai Pujiang Talent Program. He has received numerous national awards, such as the Wu Wenjun Artificial Intelligence Youth Science and Technology Award and the Excellent Doctoral Dissertation Award of the Chinese Institute of Electronics, as well as international honors including the IEEE TBC Best Paper Award and the IEEE TMM Best Paper Nomination. He has also won championships in international competitions held at conferences such as CVPR and ECCV, and has published over 100 papers in IEEE/ACM Transactions and CCF-A ranked journals and conferences, with over 10,000 citations on Google Scholar. He serves as an editorial board member for journals including ACM TOMM.

Professor Guangtao Zhai is a Distinguished Professor at Shanghai Jiao Tong University, a Jointly Appointed Scientist at the Shanghai Artificial Intelligence Laboratory, an IEEE Fellow, and a national-level high-caliber talent. He has conducted long-term research in multimedia intelligence and has been recognized as a Clarivate Highly Cited Researcher globally. Professor Zhai has received over 30 international awards, including the Best Paper Awards from IEEE Transactions on Multimedia and IEEE Transactions on Broadcasting.

Professor Zhai’s research group focuses on multimedia signal processing, perceptual quality assessment, multimodal intelligent agents, and data benchmarking. Their application areas span multimedia, AI-generated content, virtual/augmented/extended reality (VR/AR/XR), the metaverse, and smart healthcare.

Paper link: https://www.cell.com/patterns/pdf/S2666-3899(25)00216-8.pdf

Author: School of Integrated Circuits (School of Electronic Information and Electrical Engineering)

Contributing Unit: School of Integrated Circuits (School of Electronic Information and Electrical Engineering)